My college hosts a professional development day every semester for faculty and staff, and this semester’s is…interesting. There’s a theme for the day, normally something connected loosely with a recent college goal or broad focus. This semester, it’s “Approaching AI with Teamwork and Heart.”

I got this theme in an email along with a call for presentation proposals, so you can bet that I shot a message to a friend on campus, asking if they wanted to co-present something with the following description:

“There are no ethical use cases for generative AI in higher education. In this presentation, we’ll discuss a host of ethical reasons why generative AI tools are not elements of teaching or learning and how the use of generative AI is fundamentally at odds with our students’ agency as well as their humanity.”

I’m going to use this post to collect some notes and references as I prepare for this session (if it gets accepted).

Data Security and Privacy

- Legal experts warn that user-facing generative AI tools should be considered insecure, and that even with confidentiality agreements there is little guarantee that inputted data will remain private.1

- Ed tech companies have failed to keep students’ data secure2 and have been alleged to have harvested and monetized student data without permission.3

- The health care industry, which has been quick to adopt generative AI tools, has seen increased data policy violations directly due to the use of generative AI tools.4

- Generative AI tools collect huge amounts of data on users, and data leaks in generative AI models are typically used to train the model itself, meaning identifying information can be retrieved long after the leaked data is “removed.”5

Training Data and Models

- Training data sets are built from anything searchable on the Internet, including huge amounts of personal information, unverified claims, and intentional misinformation.6

- Because training data includes copyrighted material, output from generative AI tools can and does include plagiarized material.7

- Institutions are encouraged to develop clear policies about intellectual property,8 and using generative AI tools on any student could violate these policies.

- Bias in generative AI models comes from a mixture of human decisions and systemic issues in the data or models themselves, and efforts to “debias” these models are often costly and ineffective, and can even compound the biases.9

- Biases are based on racism,10 gender bias,11 ableism,12 fatphobia,13 anti-Queer bias,14 and political/religious bias.15

- Generative AI models are “black box” models–opaque and uninterpretable–making compliance with privacy policies, protection against biases, and general accountability difficult or impossible.16

- Models rely heavily on human classification for training, which means workers are required to watch abusive content in order to classify violent and abusive outputs.17

Environmental Impact and Energy Use

- Data centers have a high ratio of physical footprint to employment. Large data centers do not scale the number of jobs they create with the space they take.18

- Data centers can use the same amount of water (mostly used for cooling hardware) as a town of 50,000 people and contribute to air quality degradation and public health costs.19

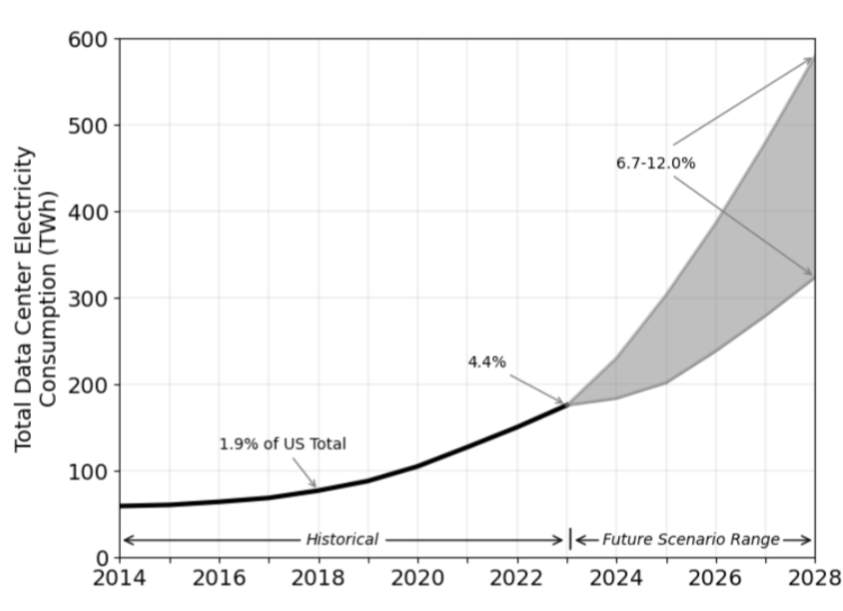

- Electricity usage by data centers is increasing rapidly, putting stress on the energy infrastructure. Updates are now required more often, resulting in outages and increased billing for the general population in those areas.20

Total U.S. data center electricity use from 2014 to 2028.20

- Previous clean or renewable energy usage requirements for data centers have been dismantled, allowing for the increased energy usage to come from energy sources that we know are not sustainable or environmentally friendly.21

- Black Americans are disproportionately harmed by air pollution already,22 and are expected to have an even larger increase in health risks due to the increased demand for data centers.23

User Safety

- Several lawsuits have been filed against OpenAI, alleging that their ChatGPT product has been partially responsible for wrongful death, assisted suicide, manslaughter, and other consumer risks due to negligence.24 These have been so frequent and high-profile that OpenAI has a public statement on their website detailing their approach to mental-health litigation.25

- Elon Musk’s xAI is under investigation26 due to the amount of non-consensual and sexually explicit images created to harass women on Twitter/X, as well as child sexual abuse material created using the generative AI tool, Grok.27

- Psychologists are concerned about the increase in psychosis connected with generative AI usage.28

- While tech companies claim to have implemented safety features and guard rails for their generative AI tools, there is little certainty that they are actually effective or cannot be circumvented.29

Academic Malpractice

- Use of generative AI tools for assistance in tasks does not lead to statistically significant advantages in completing the tasks, but does lead to statistically significant reductions in retention or understanding of the task.30

- Humans who engage with generative AI tools in their work become less engaged and less motivated, and any efficiency advantages do not persist in longer-term contexts.31

- When people use generative AI tools for assistance in tasks, they reduce the effort that they put into the task and do not think critically.32

- In educational settings, generative AI usage leads to short-term gains in lower-cognitive tasks, with decreases in the higher-order cognitive tasks like retention.33

- Ed tech products, while claiming to provide highly tailored assistance for each individual student, are largely useless and have no positive impact on learning. If anything, they have a negative impact on learning.34

Conclusion

Even with this extremely incomplete list, I think it suffices to say that, if this presentation proposal gets accepted, we’ll have enough content to fill the hour.

It is shameful for any academic institution to embrace generative AI tools in any way.

References

- FTC Takes Action Against Education Technology Provider for Failing to Secure Students’ Personal Data

- IXL class-action suit advances amid student data harvesting claims

- Unmasking EdTech’s Surveillance Infrastructure in the Age of AI

- Your Personal Information Is Probably Being Used to Train Generative AI Models

- Addressing Bias in Generative AI: Challenges and Research Opportunities in Information Management

- Covert Racism in AI: How Language Models Are Reinforcing Outdated Stereotypes

- Trained AI models exhibit learned disability bias, IST researchers say

- AI is biased in favour of US evangelicalism. It doesn’t have the mind of Christ

- Generative AI and the imperative for transparent monitoring

- ‘In the end, you feel blank’: India’s female workers watching hours of abusive content to train AI

- Executive Order: Accelerating Federal Permitting of Data Center Infrastructure

- Fine Particulate Air Pollution from Electricity Generation in the US: Health Impacts by Race, Income, and Geography

- Data Center Boom Risks Health of Already Vulnerable Communities

- Seven more lawsuits filed against OpenAI for ChatGPT manipulation and ‘suicide coaching’

- Attorney General Bonta Launches Investigation into xAI, Grok Over Undressed, Sexual AI Images of Women and Children

- Elon Musk’s Grok AI floods X with sexualized photos of women and minors

- Researchers Say Guardrails Built Around A.I. Systems Are Not So Sturdy

- Human-generative AI collaboration enhances task performance but undermines human’s intrinsic motivation

- The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers

- Short-Term Gains, Long-Term Gaps: The Impact Of GenAI and Search Technologies On Retention